PaLM was Google’s answer to GPT, and with the release of Google PaLM 2, it’s taking a significant leap, but do you know how PaLM 2 fares to its predecessor?

Google PaLM 2 is a natural language processing that uses 5x more text data than PaLM. However, it is a smaller model with more efficient techniques and only 340 billion parameters, making it lighter than its predecessor.

This guide will tell you how Google PaLM 2 exceeds its predecessor PaLM and the unique features you can unlock.

What Is Google PaLM?

Google PaLM (Pathways) is a large-scale language model (LLM) developed by Google Research in 2022.

In fact, it was inspired by the success of models like OpenAI’s GPT-3 (Generative Pre-trained Transformer) to create an advanced language model.

Therefore, Google designed PaLM to compete with GPT technology to understand and generate natural language text, including machine translation, text summarization, question answering, and more.

Pathways is an asynchronous distributed dataflow for machine learning.

This innovative system allows simultaneous operation by dividing tasks into smaller pieces and letting them do many things simultaneously.

Watch the video to learn more about Pathways,

It would not be wrong to say that it’s an advancement on Google’s previous LLMs GLaM, LaMDA, Gopher, and Megatron-Turing NLG.

However, it is trained on vast text data from the internet and boasts 540 billion parameters, unlike on-going Google’s LLM projects.

Here are a few features of Google PaLM.

- Language Understanding and Generation: It is designed for multiple Natural Language Processing tasks, including distinguishing cause and effect and comprehending conceptual combinations.

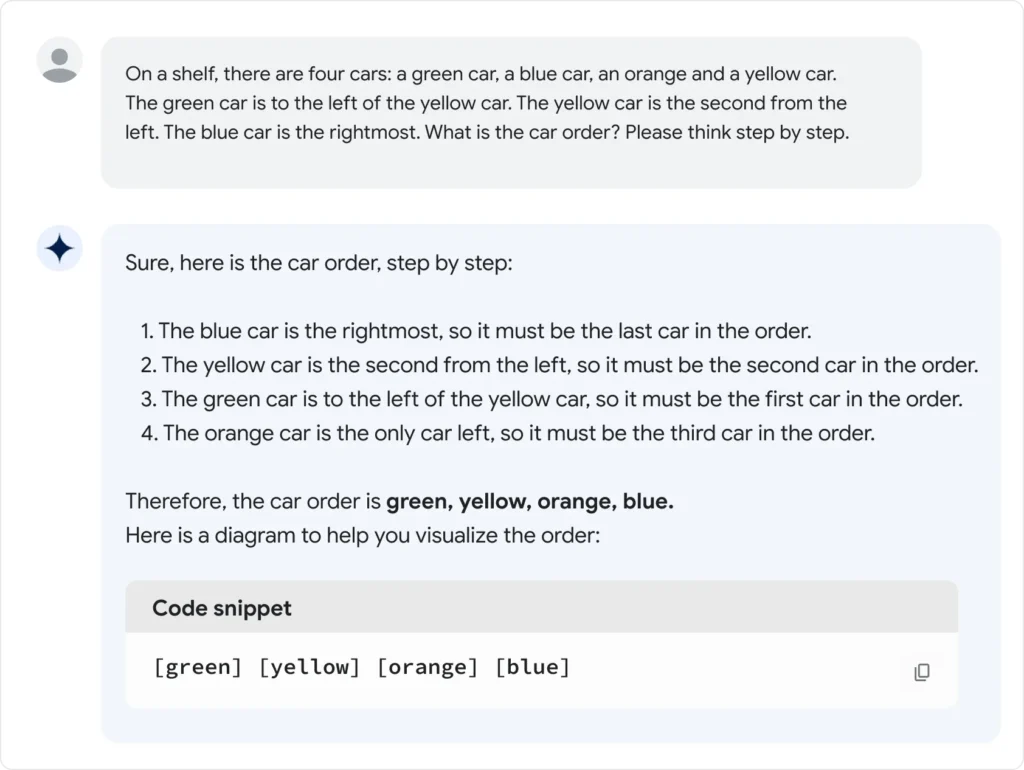

- Reasoning: Using chain-of-thought prompting, it can carry out multi-step arithmetic or common-sense reasoning.

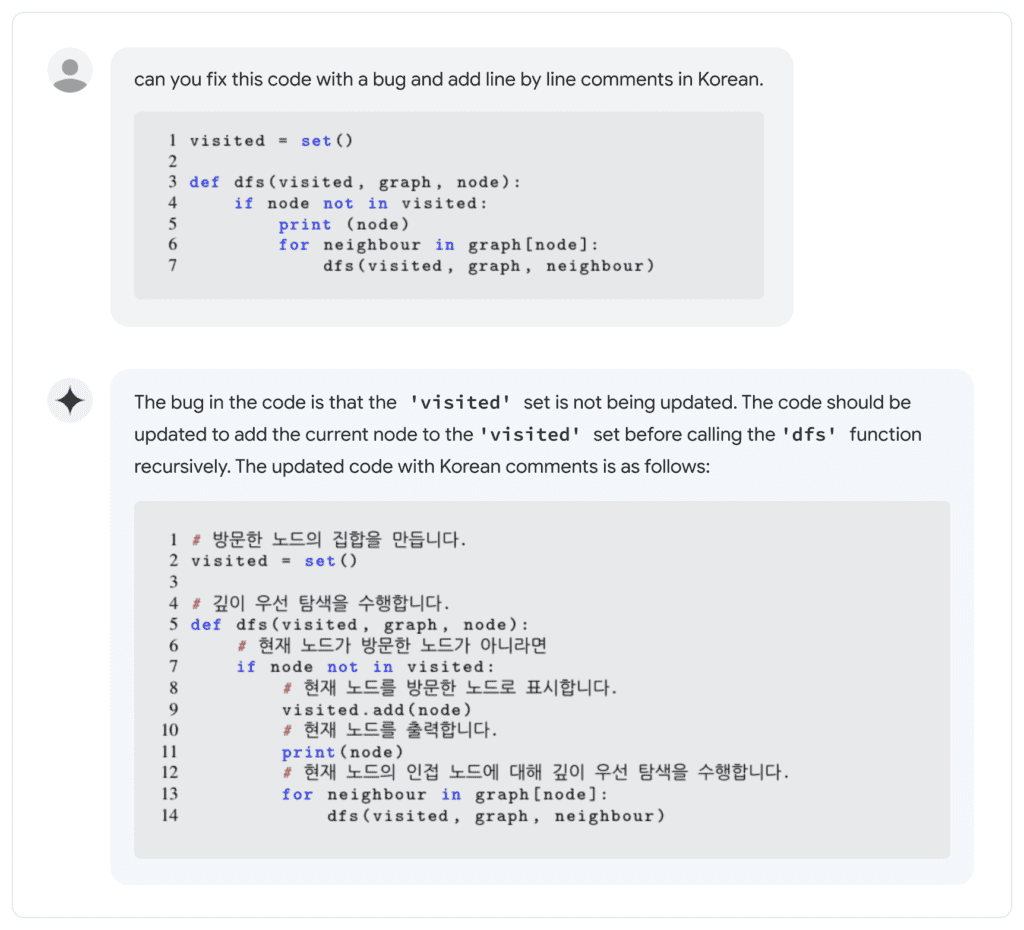

- Code Generation: It can write codes, translate code from one language to another, and fix compilation errors.

- Applications: The typical applications include content creation, virtual assistants, text analysis, personalized recommendations, and language understanding research.

What Is Google PaLM 2?

Google PaLM 2 is a next-generation language model that builds on its predecessor PaLM: Pathways, released by Google Research in 2023.

What sets PaLM 2 apart from PaLM is its ability to reason tasks more efficiently and quickly, including coding and mathematics, classification and question answering, translations, and multilingual proficiency.

However, unlike PaLM, it is only trained on 340 billion parameters and five times more text data from the internet, making it robust yet light and easy to deploy.

In fact, it boasts improved capabilities over GPT-3 and 4 models and is available in smaller sizes.

Here is what is unique about PaLM 2.

- Pre-Training Data: Google removed sensitive, personally identifiable information and filtered duplicate texts to reduce memorization.

- New Capabilities: It demonstrates improved multilingual ‘toxicity’ classification capabilities to reduce improper answer generation.

- Evaluations: It is evaluated for potential harms and bias across various downstream uses, including toxic language harms and social prejudices.

Google’s recently launched Chatbot, Google BARD, is built on the PaLM 2 model, which is still a BETA version of what the app can do.

Furthermore, other popular applications include Med-PaLM 2 and Sec-PaLM, medical text, and cybersecurity analysis.

Users can also access PaLM 2 LLM model through Vertex AI, Google’s cloud services under one roof, by paying a subscription fee.

Therefore, PaLM 2 is more advanced, quicker, and versatile than its predecessor.

Google PaLM Vs. PaLM 2 – Similarities

Google PaLM 2 is not very different from its predecessor because it was built on the existing technology with some fine-tuning.

Here are five stark similarities between the two of Google’s most prominent LLM technologies.

1. Large-Scale Training

Google’s language models, the PaLM series, require extensive training on large datasets to learn language patterns and relationships.

Google’s Blog cites that both language models are trained on a large corpus of text data, such as websites, blogs, news, programming languages, etc.

The first version of PaLM was trained on 780 billion tokens and 540 billion parameters, while PaLM 2 is conditioned on 3.9 trillion tokens and 340 billion parameters. Google PaLM parameters contain Arithmetic, Summarization, Question and Answering, etc. (Source: AI.GoogleBlog)

2. Research And Innovation

Google’s primary intention in building language models is to represent ongoing research and innovation in natural language processing.

Similar to PaLM AI, which was based on earlier LLM models, PaLM 2 is hugely based on PaLM and ongoing LLMs like Glam, LaMDA, Gopher, etc.

For now, you can only use the Beta phase of Bard and particular features in Vertex AI, which is based on PaLM 2.

3. Versatile Applications

Google’s language models are designed to apply to a wide range of ML and natural language processing tasks.

Therefore, PaLM can provide efficient text classification, named entity recognition, sentiment analysis, language translation, question answering, etc.

4. Compute-Optimal Scaling

PaLM and PaLM 2 utilize compute-optimal scaling, achieving optimal performance and efficiency by effectively using computational resources as the system scales or expands.

Moreover, the scaling ensures that the computational resources are efficiently utilized as the system grows while minimizing bottlenecks.

5. Transformer Architecture And TPU v4

These LLMs are based on transformer architectures, enabling efficient text processing and capturing long-range dependencies.

At the hardware level, both LLMs are trained over two TPU v4 pods, using 3,072 TPU v4 chips in each pod attached to 768 hosts, probably the most extensive TPU configuration described to date.

Google PaLM Vs. PaLM 2 – Major Differences

Although similar technology, Google PaLM and PaLM 2 share some major differences.

PaLM 2 is built upon PaLM and existing Google LLM technologies; therefore, it is quite an advanced model.

Let’s discuss how PaLM 2 fares to its predecessor and whether it differs.

| Features | PaLM | PaLM 2 |

|---|---|---|

| Pre-training data | It is trained on 780 billion tokens and 540 billion parameters. | It is trained on 100 languages, 3.6 trillion tokens, and 340 billion parameters. |

| Compute-optimal Scaling | PaLM is a larger model than PaLM 2. | PaLM 2 has adopted technique that makes it smaller. |

| Availability of Technology | It has limited applications and usability. | It has multiple applications, including 25 features and products, including Bard. |

| Multilingualism | It support less language as it is an older model. | It supports upto 100 languages, including many codes. |

| Evaluations and New Capabilities | It is an outdated architecture with many bottlenecks. | It is an improved architecture with new capabilities. |

1. Pre-Training Data

PaLM 2 is more powerful than any existing model, including GPT-3 and Google’s PaLM.

It boasts training in 100 languages, 3.6 trillion tokens, and 340 billion parameters.

PaLM, conversely, boasts training in half of the languages with only 780 billion tokens.

It was trained on 540 billion parameters as foundational LLM technology, significantly more than the latest PaLM 2.

2. Use Of Compute-Optimal Scaling

Although both LLMS have training scaled using Compute-optimal scaling, PaLM 2 has adopted a technique that makes it smaller than PaLM.

It makes the model more efficient with the overall performance by providing faster inference, fewer parameters to serve, and a lower serving cost.

Therefore, adapting PaLM 2 is more feasible and cheaper than PaLM.

3. Availability Of Technology

PaLM 2 has already powered 25 features and products, including Bard and Vertex AI.

It was released a year after PaLM’s arrival, making PaLM’s applications limited and short-lived.

Moreover, PaLM 2 is available in four smallest to largest sizes: Gecko, Otter, Bison, and Unicorn.

4. Evaluations And New Capabilities

PaLM 2 has an improved architecture that can evaluate potential harms and biases that may arise during dialog, classification, translation, and question-answering.

It also boasts enhanced multilingual toxicity classification capabilities.

You can use a built-in control to limit toxic answer generation, which fits perfectly into AI’s ethical concerns.

5. Multilingualism

PaLM 2 has extensive training in a diverse range of multilingual text, encompassing over 100 languages.

It helps produce enhanced skills for comprehending, creating, and translating complex textual content, including idioms, poems, coding, and riddles.

Due to various limitations, PaLM is less likely to handle complex textual tasks.

Continue reading to discover the similarities and differences between ChatGPT Vs. Google Bard and ChatGPT Vs. Google Bert.

The Bottom Line

Although Bard is still developing, PaLM 2 is quite a powerful LLM tool for Chatbot services.

It can be a valuable tool for coders as it excels at popular programming languages like Python and JavaScript, including generating specialized code in languages like Prolog, FORTRAN, and Verilog.

Check how PaLM 2 can help ease your work by signing up for Vertex AI and Bard.

Continue reading to find out what makes LLaMA different from Alpaca AI.